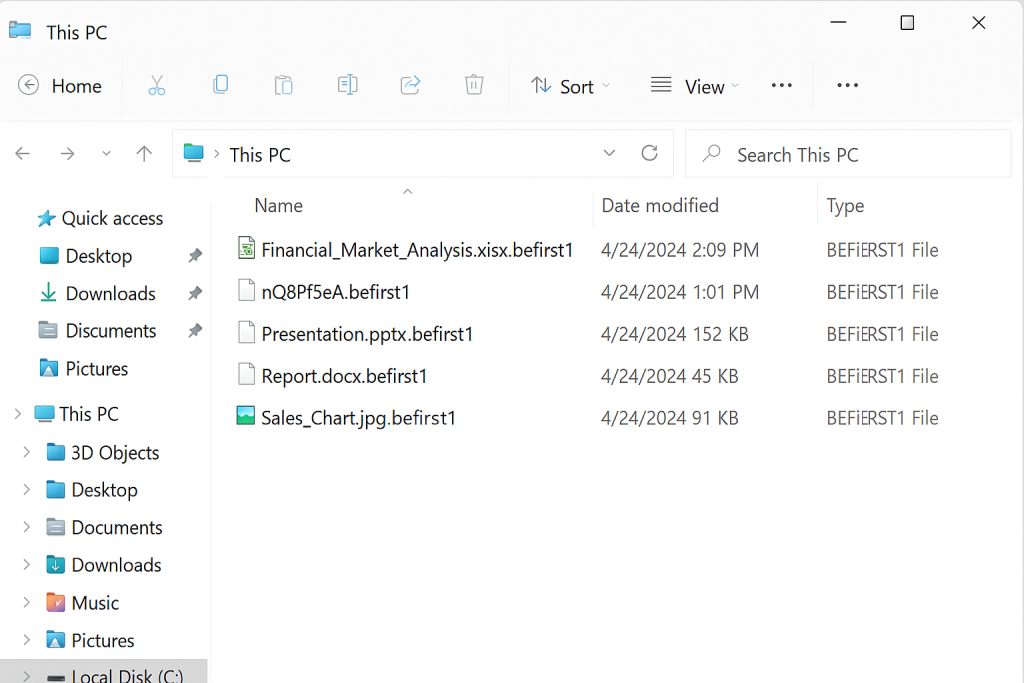

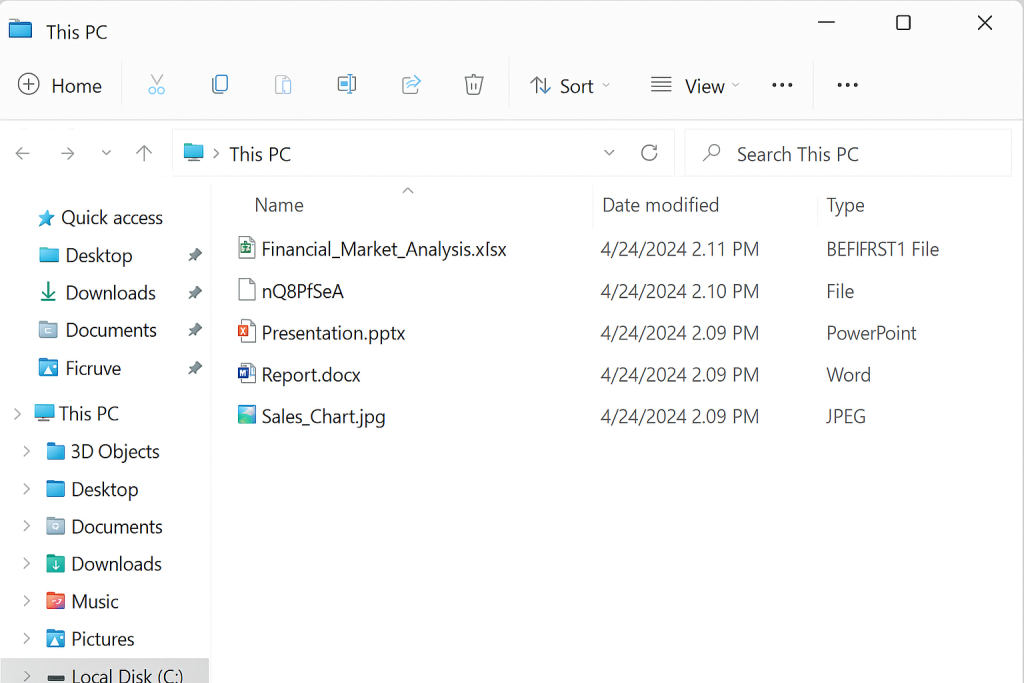

The incident started quietly in a mid-sized company in Laos. A few users said their apps were “stuck”, then shared folders stopped responding, and some line-of-business tools simply crashed on launch. When the IT team checked the main file and application servers, they found critical data renamed with a strange new extension like .befirst1, and ransom notes named READ_NOTE.html scattered across key directories. Very quickly, they realised this was BeFirst Ransomware, and the entire business network was being crippled in real time.

In these situations, the first 24 hours decide whether the company loses control or manages a structured, realistic recovery.

How BeFirst Ransomware Crippled the Business Network

BeFirst Ransomware did not just hit one server. It used the access it had gained to move laterally across the internal network and encrypt shared resources used daily by sales, operations, and management.

As a result:

- Users could not access shared documents or project folders.

- Some internal applications failed because their data files were locked.

- Core business processes slowed down or stopped completely.

The company quickly shifted from a “small IT problem” mindset to a full-scale BeFirst Ransomware crisis that demanded immediate, organised action.

Immediate Containment in the First Hours

The first critical decision was to contain the attack instead of rushing into random fixes.

The IT team immediately:

- Disconnected affected servers and storage from the network.

- Disabled unsafe remote access, outdated VPN accounts, and unused admin logins.

- Instructed staff to stop accessing shared folders and not to run any “repair tools” found online.

- Preserved ransom notes and a small set of encrypted files as evidence and analysis samples.

By isolating systems quickly, they stopped BeFirst Ransomware from spreading further into endpoints, remaining file shares, and backup targets. The encrypted data stayed in a stable state, which later proved essential for proper assessment and recovery.
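The evidence-preservation step can be sketched in a few lines of Python. This is a minimal illustration, not the tooling the team actually used: the function names, the `manifest.json` file, and the index-prefixed naming scheme are all assumptions made for the example. It copies each sample into a separate evidence folder and records a SHA-256 hash so that any later change to a copy can be detected.

```python
import hashlib
import json
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def preserve_samples(sources, evidence_dir: Path) -> list:
    """Copy each sample into evidence_dir and record its hash in a manifest.

    Hypothetical helper: the layout and manifest format are illustrative only.
    """
    evidence_dir.mkdir(parents=True, exist_ok=True)
    manifest = []
    for index, source in enumerate(map(Path, sources)):
        # Prefix with an index so same-named files (for example several
        # READ_NOTE.html notes from different folders) do not overwrite each other.
        target = evidence_dir / f"{index:04d}_{source.name}"
        shutil.copy2(source, target)  # copy2 also tries to keep timestamps
        manifest.append({
            "original_path": str(source),
            "evidence_copy": target.name,
            "size_bytes": target.stat().st_size,
            "sha256": sha256_of(target),
        })
    (evidence_dir / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return manifest
```

The originals are only read, never modified, and the evidence folder should live on storage that is not connected to the affected network.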

Giving Management a Clear Business View

Next, IT and operations created a short, plain-language briefing for management.

They focused on three key questions:

- What is broken right now? Several internal systems and shared data used for daily operations.

- What still works? Email, some cloud services, and external communication channels.

- What is at risk? Ongoing orders, billing, reporting, and customer satisfaction if the outage continues.

This clarity helped leadership stay calm. They approved a structured BeFirst Ransomware recovery effort, prioritising critical business functions instead of demanding “instant fixes” or pushing for immediate ransom payment.

Technical Assessment and Specialist Involvement

With containment in place, the team moved into technical assessment.

They mapped:

- Which servers, shares, and applications were encrypted.

- How backups were configured and where they were stored (including any offline copies).

- The approximate time window when BeFirst Ransomware activity began.
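A mapping exercise like this can be partly automated. The sketch below is an assumption-laden example rather than the team's actual method: `survey_share` is a hypothetical helper, the `.befirst1` extension and `READ_NOTE.html` name are taken from this incident, and file modification times are only a rough indicator of when encryption happened, so they should be cross-checked against server and security logs. It is meant to run against a clone or a read-only mount, never against live production disks.

```python
from collections import Counter
from datetime import datetime, timezone
from pathlib import Path

ENCRYPTED_SUFFIX = ".befirst1"      # extension seen in this incident; adjust per variant
RANSOM_NOTE_NAME = "READ_NOTE.html"  # ransom note name seen in this incident


def _as_utc_iso(timestamp: float) -> str:
    """Render a POSIX timestamp as an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat()


def survey_share(root: Path) -> dict:
    """Walk a share and summarise which folders hold encrypted files or notes."""
    encrypted_per_dir = Counter()
    note_dirs = []
    mtimes = []
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        if path.name == RANSOM_NOTE_NAME:
            note_dirs.append(str(path.parent))
        elif path.name.lower().endswith(ENCRYPTED_SUFFIX):
            encrypted_per_dir[str(path.parent)] += 1
            mtimes.append(path.stat().st_mtime)
    return {
        "encrypted_total": sum(encrypted_per_dir.values()),
        "encrypted_per_dir": dict(encrypted_per_dir),
        "ransom_note_dirs": sorted(note_dirs),
        # Rough activity window based on modification times only.
        "earliest_encrypted_mtime": _as_utc_iso(min(mtimes)) if mtimes else None,
        "latest_encrypted_mtime": _as_utc_iso(max(mtimes)) if mtimes else None,
    }
```

Running it once per share gives a per-folder count that can feed directly into the management briefing and the restore priority list.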

Encrypted file samples and relevant logs were collected and reviewed. The company then contacted specialist responders via FixRansomware.com and submitted samples securely through app.FixRansomware.com to confirm the BeFirst Ransomware variant, understand its behaviour, and evaluate viable recovery options.

For overall strategy, they also aligned with the CISA/MS-ISAC Ransomware Guide, which emphasises isolating affected systems, analysing impact, and restoring from safe backups instead of blindly negotiating with attackers.

Building a Recovery Plan for the Network in Laos

Once they had more information, the organisation and the experts designed a BeFirst Ransomware recovery plan tailored to the business network in Laos.

Key elements included:

- Clone before touching production disks

The team created sector-level clones of affected storage volumes. All tests and potential decryption attempts took place on these clones, keeping original disks as a safety net.

- Identify clean and trustworthy backups

Backup sets stored on separate devices and offline media were checked carefully. Clean copies from before the attack formed the baseline for restoring core services.

- Restore in business-first order

Systems supporting revenue and customer obligations (such as order management, active project data, and essential internal documents) were restored first. Less critical archives were planned for later phases.

- Rebuild recent data when needed

Where recent information was missing from backups, the team reconstructed it using email attachments, exports, and files from endpoints that had not been encrypted, documenting all manual changes for audit purposes.

Once restored systems passed integrity checks and basic functional tests, users were gradually reconnected, with monitoring in place to catch any anomalies early.
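One way to run such an integrity check is to compare restored files against hashes recorded when the backup was taken. The sketch below assumes such a baseline exists as a mapping of relative paths to SHA-256 hashes. The article does not say the company had one, so treat `verify_restore` and its report format as hypothetical. It also flags any file that still carries the `.befirst1` extension.

```python
import hashlib
from pathlib import Path

ENCRYPTED_SUFFIX = ".befirst1"  # extension seen in this incident


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(restored_root: Path, expected: dict) -> dict:
    """Compare restored files with expected hashes keyed by relative POSIX path.

    `expected` is an assumed baseline, e.g. {"orders/open.csv": "<sha256>"}.
    """
    report = {"ok": [], "missing": [], "mismatch": [], "still_encrypted": []}
    for relative, expected_hash in sorted(expected.items()):
        candidate = restored_root / relative
        if not candidate.is_file():
            report["missing"].append(relative)
        elif sha256_of(candidate) != expected_hash:
            report["mismatch"].append(relative)
        else:
            report["ok"].append(relative)
    # Independently of the baseline, list files that still look encrypted.
    report["still_encrypted"] = sorted(
        path.relative_to(restored_root).as_posix()
        for path in restored_root.rglob("*")
        if path.is_file() and path.name.lower().endswith(ENCRYPTED_SUFFIX)
    )
    return report
```

A system would only be reconnected to users when `missing`, `mismatch`, and `still_encrypted` are all empty, or when every remaining entry has been reviewed and explained.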

Lessons Learned from BeFirst Ransomware in Laos

In the end, the company recovered essential data and services without paying the ransom. The BeFirst Ransomware incident revealed several important lessons:

- Business networks need layered backups, including at least one offline or immutable copy.

- Remote access and privileged accounts must be tightly controlled and reviewed regularly.

- Even a small incident response playbook dramatically improves reaction speed and reduces mistakes.

The attack showed how quickly BeFirst Ransomware can cripple a business network in Laos, but it also proved that a disciplined response (containment, clear communication, technical assessment, and expert-led recovery) can bring systems back and leave the organisation more resilient than before.