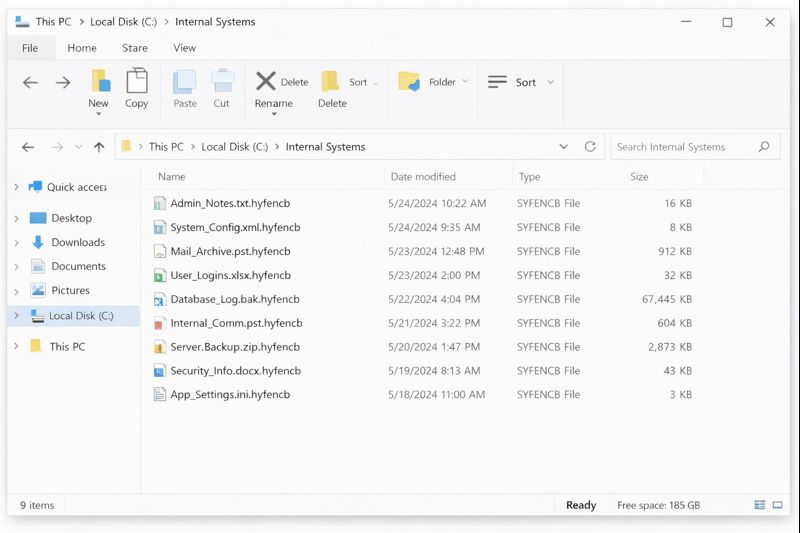

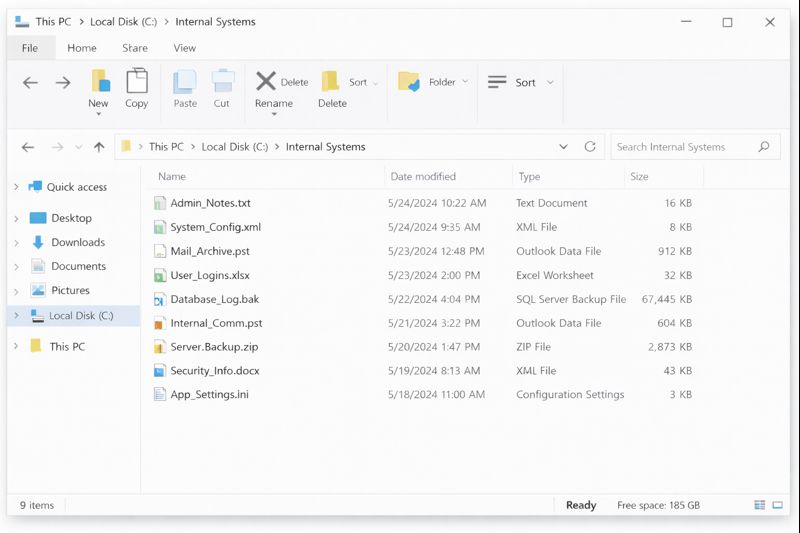

When staff at a Japanese enterprise started their shift, the only sign of trouble was that internal tools felt “slower than usual”. Within an hour, dashboards stopped loading, shared folders refused to open, and several applications crashed at login. On core servers, the IT team found business data renamed with a new .hyfencb extension and a ransom note named WHAT_HAPPEN_OF_MYLIFE.html in critical directories. It was clear that HYFBTCLOCKER Ransomware had taken over internal systems and was choking the business.

From that moment, whether the company faced a long outage or a controlled recovery depended on the next 24 hours.

How HYFBTCLOCKER Ransomware Hit Internal Systems in Japan

HYFBTCLOCKER Ransomware did not just damage one file share. It targeted the systems that glued daily work together: internal web apps for approvals, shared folders with documents and reports, and data directories behind dashboards that management used every morning.

Email and some SaaS platforms still worked. However, the internal environment in Japan that supported day-to-day operations was heavily disrupted. A disciplined response became non-negotiable.

Containment Before “Fixing” Anything

The team knew they had to stop the bleeding first and avoid random experiments.

They quickly disconnected affected servers and storage from the production network, disabled non-essential remote access and old admin accounts, told staff not to touch encrypted files or install unofficial tools, and preserved logs plus a minimal set of samples for analysis.

Because of this, HYFBTCLOCKER Ransomware did not spread further into remaining servers, endpoints or backup targets that were still clean. The environment stayed broken, but stable enough to study and recover.

Giving Management a Clear Business View

Next, IT and operations prepared a short update for leadership.

They explained what was currently down (internal apps, shared folders and reporting data), what still worked (email, external services and customer-facing platforms), and what was at risk (order processing, approvals, financial accuracy and reputation in the market).

This framing helped management treat it as a serious HYFBTCLOCKER Ransomware incident, but not as the end of the business. They approved time, budget and outside help for a structured recovery instead of gambling on a quick ransom payment.

Technical Assessment and Specialist Support

With containment and alignment in place, the team started a structured technical assessment.

They mapped which systems and paths were encrypted, where original data lived (databases and a few external mirrors), and how backups were configured, especially any offline or offsite copies.
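A mapping pass like this can be scripted as a read-only scan. The following is a minimal sketch, not the team's actual tooling: it assumes the .hyfencb extension and the WHAT_HAPPEN_OF_MYLIFE.html note name reported in this case, and the function name `inventory_encrypted` is hypothetical. It only lists files and never opens or modifies them, so it is best run against a mounted clone or a read-only share.

```python
import os
from collections import Counter

# Indicators reported in this incident; adjust for other ransomware families.
ENCRYPTED_SUFFIX = ".hyfencb"
RANSOM_NOTE = "WHAT_HAPPEN_OF_MYLIFE.html"


def inventory_encrypted(root):
    """Walk `root` without modifying anything.

    Returns (encrypted, note_dirs):
      encrypted: Counter mapping directory -> number of encrypted files in it
      note_dirs: sorted list of directories that contain a ransom note
    """
    encrypted = Counter()
    note_dirs = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower().endswith(ENCRYPTED_SUFFIX):
                encrypted[dirpath] += 1
            elif name == RANSOM_NOTE:
                note_dirs.append(dirpath)
    return encrypted, sorted(note_dirs)


if __name__ == "__main__":
    import sys

    counts, notes = inventory_encrypted(sys.argv[1] if len(sys.argv) > 1 else ".")
    # Print the most heavily affected directories first.
    for directory, count in counts.most_common():
        print(f"{count:6d} encrypted files  {directory}")
    for directory in notes:
        print(f"ransom note present    {directory}")
```

Sorting directories by encrypted-file count gives a quick picture of which shares were hit hardest, which is useful input for the restore order decided later.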

Encrypted samples and log extracts were then shared with specialist responders via FixRansomware.com, using the secure upload portal at app.FixRansomware.com. The goal was to confirm the HYFBTCLOCKER Ransomware family, understand realistic recovery options and avoid destructive trial-and-error.

For overall strategy, the team also used the official CISA Ransomware Guide on the StopRansomware portal, which stresses isolation, analysis and restoration from trusted sources.

Bringing Internal Systems Back Online

Once impact and options were clear, the Japanese enterprise and its experts built a recovery plan focused on business value, not just file counts.

Key steps included:

- Cloning affected volumes before any tests so experiments ran on copies, not on original disks.

- Validating backups in an isolated environment and using clean restore points as the base for critical data and configs.

- Restoring systems in business-first order: core workflow tools, finance-related apps and key shared folders before secondary services.

- Reconstructing recent gaps using exports from SaaS systems, email attachments and files on unaffected endpoints.

- Testing end-to-end workflows before reopening access for larger groups of users.

Only when order creation, approvals and basic reporting worked reliably did the team allow normal traffic back to the restored internal systems.
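The backup-validation step above can be illustrated with a checksum comparison. This is a sketch under a stated assumption: that a manifest of SHA-256 hashes was recorded when the backup was taken, which the original account does not confirm. The names `sha256_of` and `verify_restore` are hypothetical helpers, and the check is meant to run inside the isolated restore environment.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large restores do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(manifest, restore_root):
    """Compare restored files against a manifest taken at backup time.

    manifest: dict mapping relative path -> expected SHA-256 hex digest
              (assumed to exist; hypothetical format).
    Returns a dict mapping relative path -> "missing" or "hash mismatch".
    An empty dict means every listed file was restored intact.
    """
    problems = {}
    root = Path(restore_root)
    for rel_path, expected in manifest.items():
        target = root / rel_path
        if not target.is_file():
            problems[rel_path] = "missing"
        elif sha256_of(target) != expected:
            problems[rel_path] = "hash mismatch"
    return problems
```

An empty result from `verify_restore` only shows that the restored files match what the manifest recorded. It does not prove the backup itself was clean, so it complements the end-to-end workflow tests rather than replacing them.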

Lessons from a HYFBTCLOCKER Ransomware Case in Japan

In the end, the enterprise brought internal systems and data back online without paying the ransom. The HYFBTCLOCKER Ransomware incident delivered several practical lessons:

- Internal systems deserve layered, regularly tested backups, including at least one offline or immutable layer.

- Remote access and privileged accounts must be tightly controlled, monitored and reviewed.

- Even a short, written incident-response playbook greatly reduces confusion when a real attack hits.

This Japanese case shows how fast HYFBTCLOCKER Ransomware can take over internal systems. It also shows that disciplined containment, clear communication and expert-guided recovery through FixRansomware.com can safely bring data back and leave the organisation more resilient than before.